Basics of Training and Optimizing Neural Networks

Topics

This post covers the seventh lecture in the course: “Basics of Optimizing Neural Networks; Classification.”

This is a kitchen-sink lecture on optimizing neural networks. The textbook by Goodfellow, Bengio, and Courville is a very helpful reference, and the additional references listed below provide further useful insights.

Lecture Video

References Cited in Lecture 7: Basics of Optimizing Neural Networks; Classification

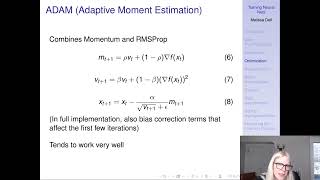

Momentum

- Goh, Gabriel. “Why momentum really works.” Distill 2, no. 4 (2017): e6 (excellent treatment of momentum).

Batch Normalization

- Ioffe, Sergey, and Christian Szegedy. “Batch normalization: Accelerating deep network training by reducing internal covariate shift.” In International Conference on Machine Learning, pp. 448-456. PMLR, 2015.

- Santurkar, Shibani, Dimitris Tsipras, Andrew Ilyas, and Aleksander Madry. “How does batch normalization help optimization?” Advances in Neural Information Processing Systems (2018).

Other Refinements

- He, Tong, Zhi Zhang, Hang Zhang, Zhongyue Zhang, Junyuan Xie, and Mu Li. “Bag of tricks for image classification with convolutional neural networks.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 558-567. 2019.

Hyperparameter Tuning

- Li, Hao, Zheng Xu, Gavin Taylor, Christoph Studer, and Tom Goldstein. “Visualizing the loss landscape of neural nets.” Advances in Neural Information Processing Systems (2018).

- Li, Lisha, Kevin Jamieson, Giulia DeSalvo, Afshin Rostamizadeh, and Ameet Talwalkar. “Hyperband: A novel bandit-based approach to hyperparameter optimization.” The Journal of Machine Learning Research 18, no. 1 (2017): 6765-6816.

- Falkner, Stefan, Aaron Klein, and Frank Hutter. “BOHB: Robust and efficient hyperparameter optimization at scale.” In International Conference on Machine Learning, pp. 1437-1446. PMLR, 2018.

- Frankle, Jonathan, and Michael Carbin. “The lottery ticket hypothesis: Finding sparse, trainable neural networks.” arXiv preprint arXiv:1803.03635 (2018).

Other Resources

- Use Weights & Biases

- Weights and Biases: Running Hyperparameter Sweeps to Pick the Best Model

- Weights and Biases: Tune Hyperparameters

- Weights and Biases: Parameter Importance

Image Source: https://wandb.ai/