Diffusion and GANs

Topics

This post covers the seventeenth lecture in the course: “Diffusion Models and GANs.”

Diffusion models have risen rapidly since 2021. This lecture covers them, the Generative Adversarial Networks (GANs) they largely displaced, and their applications.

Lecture Video

Generative Adversarial Networks

Diffusion Models

References Cited in Lecture 17: Diffusion and GANs

Background on GANs

Goodfellow, Ian J., Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. “Generative adversarial networks.” arXiv preprint arXiv:1406.2661 (2014).

Goodfellow, Ian. “NIPS 2016 tutorial: Generative adversarial networks.” arXiv preprint arXiv:1701.00160 (2016).

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., & Courville, A. C. (2017). Improved training of Wasserstein GANs. Advances in neural information processing systems, 30.

Reed, S., Akata, Z., Yan, X., Logeswaran, L., Schiele, B., & Lee, H. (2016, June). Generative adversarial text to image synthesis. In International conference on machine learning (pp. 1060-1069). PMLR.

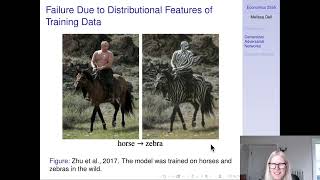

Zhu, Jun-Yan, Taesung Park, Phillip Isola, and Alexei A. Efros. “Unpaired image-to-image translation using cycle-consistent adversarial networks.” In Proceedings of the IEEE international conference on computer vision, pp. 2223-2232. 2017.

Karras, Tero, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. “Analyzing and improving the image quality of StyleGAN.” In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 8110-8119. 2020.

Alaluf, Yuval, Or Patashnik, and Daniel Cohen-Or. “ReStyle: A residual-based StyleGAN encoder via iterative refinement.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6711-6720. 2021.

Cleaning Up Dirty Scanned Documents with Deep Learning (Blog Post)

Blog Posts on Diffusion

The Illustrated Stable Diffusion

Generative Modeling by Estimating Gradients of the Data Distribution

Diffusion models are autoencoders

Code Bases

Diffusion Papers

Nichol, Alexander Quinn, and Prafulla Dhariwal. “Improved denoising diffusion probabilistic models.” In International Conference on Machine Learning, pp. 8162-8171. PMLR, 2021.

Dhariwal, Prafulla, and Alexander Nichol. “Diffusion models beat GANs on image synthesis.” Advances in Neural Information Processing Systems 34 (2021): 8780-8794. (See also https://www.youtube.com/watch?v=W-O7AZNzbzQ )

Kwon, Gihyun, and Jong Chul Ye. “Diffusion-based image translation using disentangled style and content representation.” arXiv preprint arXiv:2209.15264 (2022).

Cao, Hanqun, Cheng Tan, Zhangyang Gao, Guangyong Chen, Pheng-Ann Heng, and Stan Z. Li. “A survey on generative diffusion model.” arXiv preprint arXiv:2209.02646 (2022).

Bansal, Arpit, et al. “Cold diffusion: Inverting arbitrary image transforms without noise.” arXiv preprint arXiv:2208.09392 (2022).

Rombach, Robin, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. “High-resolution image synthesis with latent diffusion models.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10684-10695. 2022.

Peebles, William, and Saining Xie. “Scalable Diffusion Models with Transformers.” arXiv preprint arXiv:2212.09748 (2022). (DiT)

Handwriting Generation

Davis, Brian, Chris Tensmeyer, Brian Price, Curtis Wigington, Bryan Morse, and Rajiv Jain. “Text and style conditioned GAN for generation of offline handwriting lines.” arXiv preprint arXiv:2009.00678 (2020).

Bhunia, Ankan Kumar, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan, and Mubarak Shah. “Handwriting transformers.” In Proceedings of the IEEE/CVF international conference on computer vision, pp. 1086-1094. 2021. (See also https://colab.research.google.com/github/ankanbhunia/Handwriting-Transformers/blob/main/demo.ipynb )

Image Source: https://developer.nvidia.com/blog/improving-diffusion-models-as-an-alternative-to-gans-part-1/